Annual 10 Bit Request Thread

Page 1 of 1 (6 posts)


Registered Member

|

Hey all!

In light of the impending release of the Panasonic GH5, which for those of you living under rocks will be the first sub-$2k camera to feature internal 10-bit 4:2:2 recording in 4K, I think it's time to bring up the issue of support for 10-bit (and maybe even higher) color depth. As it stands, all video is processed at 8-bit, regardless of source depth. You can export 10-bit ProRes, but my understanding is that the extra data is just padded.

My question is: where does the conversion to 8-bit occur? Is this something that has to do with MLT? I peeked through some of the source code for the waveform monitor, and it was clear that it, at least, was programmed to be flexible with different bit depths. Where do effects come into play with this? I pulled in some 10-bit sample footage, threw on some bezier curves, and got banding in the highlights as soon as I pushed it far (the footage was Log). Are (some of?) the effects hard-coded to 8-bit? What about transitions?

I have a sneaking suspicion that this is a request for the MLT devs, but I figured I'd ask here first.

|

KDE Developer

|

I think our OpenGL monitor uses RGBA32 textures, so the preview is currently bound to 8-bit components.

Moreover, many filters (frei0r) and transitions (composite/qtblend/...) operate on RGBA32 conversions too. Not sure about libavfilter & Movit effects. If you don't apply any transition or filter (only cuts), does the file exported by MLT preserve the 10-bit dynamic range? I think you would get a much more precise answer on the mlt-devel list.

|

Registered Member

|

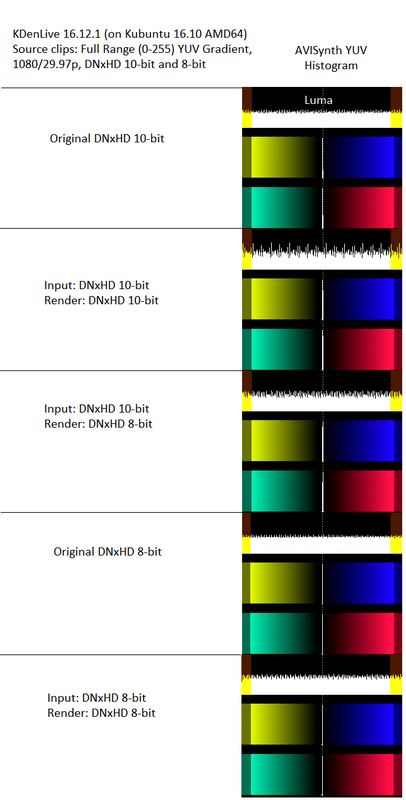

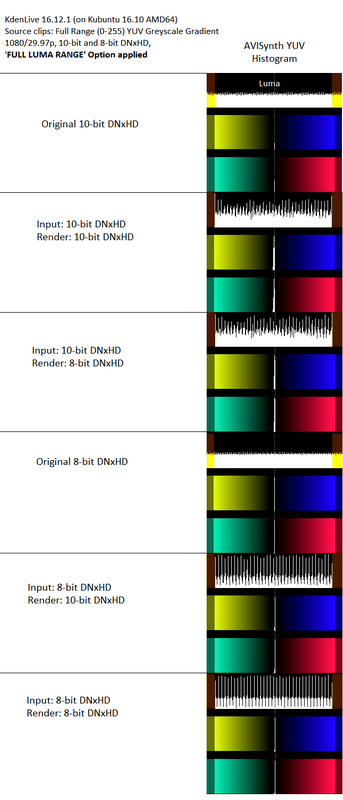

I don't see evidence of 10-bit 'pass-through' on MLT. I just ran a little test with Kdenlive 16.12.1 on Kubuntu 16.10 AMD64. For input, I used two full-range (0-255) YUV greyscale gradient clips (1080/29.97p) that were created with DaVinci Resolve, one rendered as 8-bit DNxHD and the other as 10-bit DNxHD. Testing the two inputs separately, I loaded the clip on the timeline and, without applying any effects, rendered out to DNxHD. For the 10-bit DNxHD input I rendered to both 10-bit and 8-bit DNxHD, and for the 8-bit DNxHD input I rendered to just 8-bit DNxHD. I then examined the YUV profiles of the input and rendered files using the AviSynth Histogram filter.

The screen shots of the Histograms are shown above. The top Histogram is the Luma (Y) component. The Histogram displays the full (0-255) data range. The vertical brown bars mark the limited (16-235) range boundaries.

As you can see, in all cases the full (0-255) range was preserved (passed through) in the renders. But comparing the 'smoothness' of the gradients, clearly the worst outcome was when 10-bit DNxHD was used for input and output, and the best outcome was when 8-bit DNxHD was used for input and output. Had 10-bit depth been passed through on MLT from the 10-bit DNxHD input, one would have expected a smoother gradient with the 10-bit DNxHD render.

That said, if these were original (native) clips from a camera recording with 'full range' YUV luma, you would not see full-range pass-through. Native 'full range' clips have a pix_fmt specification that flags them as being full range - yuvj422p10le in the case of 10-bit 4:2:2 - where the 'j' signifies 'jpeg' scaling. And Kdenlive treats these clips in accordance with the default decode behavior of ffmpeg (and so MLT), which is to compress (scale) the full luma range to 16-235 range - the so-called 'Limited' or 'Broadcast' range.
And it is in that compressed form that the imported frames are passed through MLT, each with its own format attached, converting to and from the particular formats required by the different effects and transitions - and, for the most part, that involves conversion to and from RGB via applied Rec709 color matrix coefficients. As such, the compressed 16-235 range persists through to export unless the render format is one that can be configured to scale back out to full 0-255 range.

That is possible with x264 because libx264 permits setting the equivalent 'full range' pix_fmt specification. So you could, for example, modify the existing 'Lossless H264' (i.e. x264 Hi 4:4:4 Predictive Intra) profile, specifying yuvj444p as the pix_fmt, or else configure an equivalent lossless intra x264 profile for 4:2:0 or 4:2:2. But that's 8-bit only, unless someone has managed to compile a build with 10-bit x264 encoding. As for other 'edit intermediate' formats, like DNxHD and ProRes - there we do have 10-bit rendering, but SWScale does not respect the full range yuvj422p10le pix_fmt and defaults to yuv422p10le on encoding - so with these formats the rendered output will always be compressed 16-235 range.

For 'non-native' clips that have full-range luma but lack the 'full range' pix_fmt flag, we do have the 'Full Luma Range' option in Clip Properties, which forces the input to be treated like a native full range clip. So I repeated the above tests with the same DNxHD greyscale gradient clips, this time applying the 'Full Luma Range' option. And, as expected, what we see on examination of the DNxHD renders is compression to 16-235 range (see the images above).

Now we see some benefit from 10-bit input, with markedly less spiking/discontinuity in the render gradients. Unfortunately I didn't save the original VirtualDub frame shots that show the degree of 'banding', but I think it is obvious from the histograms that it was much more severe with the 8-bit inputs.
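Why a 10-bit input helps with the compression to 16-235 can be illustrated with a little arithmetic. The following is only a sketch in Python, not MLT or ffmpeg code: it assumes a plain linear scaling of full-range luma to 8-bit limited range with rounding and no dithering, and the function names are made up for the illustration.

```python
from collections import Counter

def full_to_limited_8bit(code, in_bits=8):
    """Scale a full-range luma code to 8-bit limited range (16-235).

    Assumption: simple linear scaling with rounding, no dithering.
    """
    in_max = (1 << in_bits) - 1          # 255 for 8-bit, 1023 for 10-bit
    return round(16 + code * 219 / in_max)

def output_histogram(in_bits):
    """Count how many input codes of a full ramp land on each output code."""
    return Counter(full_to_limited_8bit(c, in_bits)
                   for c in range(1 << in_bits))

hist8 = output_histogram(8)    # 256 input codes squeezed into 220 output codes
hist10 = output_histogram(10)  # 1024 input codes spread over 220 output codes

for name, hist in (("8-bit", hist8), ("10-bit", hist10)):
    counts = hist.values()
    print(name, "input: output codes used =", len(hist),
          "min/max inputs per output code =", min(counts), max(counts))
```

With an 8-bit ramp, some output codes receive twice as many input codes as their neighbours, which is the kind of spiking visible in a gradient's histogram. With a 10-bit ramp the counts per output code are much more even, so the compressed gradient is smoother even though the result is still 8-bit.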
As I understand, when converting 10-bit to 8-bit, ffmpeg does apply dithering by default, so it's probably reasonable to assume that MLT does the same when importing 10-bit formats. But once imported, all processing is in 8-bit, so it's hardly surprising that you are seeing 'banding', and likely other posterization effects, when pushing your log footage around.

So yes, I too would welcome 10-bit processing in Kdenlive, and not only for 10-bit sources. In my experience with systems that natively process in 10-bit (like DaVinci Resolve), transcoding native 8-bit HD-AVC footage to 10-bit intermediate input formats does benefit scaling precision.

IMHO though, a more important and pressing need is for proper handling of full-range sources. Compression to 'broadcast range' is arguably a better outcome than clipping, but there really needs to be an option for processing and exporting at 'full-range' levels as well - like Vegas has 'Studio RGB' and 'Computer RGB' and DaVinci Resolve has 'Data' and 'Video' levels.

As it stands though, the only way I can see either becoming a reality is if there is someone with a vested interest, able and willing to champion the cause at the MLT development level. Maybe, just maybe, you are destined to be "the One", Aquatic Gateway. And if you are not, then I fear you will be making the same 'annual request' this time next year. Anyhow, let us know how you get on.

Meanwhile, if you are going for a GH5 and are on Windows (which I think we can talk about freely now that there is a Windows port) or a Mac, I'd suggest looking at DaVinci Resolve 12.5, which is free for anyone prepared to learn how to use it. You won't find anything in Linux at this time that comes close to meeting your needs, sorry to say.
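The banding that appears when log footage is pushed hard in an 8-bit pipeline can be mimicked with a toy model. This is a rough sketch in Python under stated assumptions, not a description of how any Kdenlive effect is implemented: the `stretch` curve and its 0.4/0.6 limits are hypothetical stand-ins for a strong curves adjustment, and the only thing that varies is the bit depth at which the ramp is held before the adjustment is applied.

```python
def quantize(x, bits):
    """Round a 0.0-1.0 value to the nearest code at the given bit depth."""
    levels = (1 << bits) - 1
    return round(x * levels) / levels

def stretch(x, lo=0.4, hi=0.6):
    """Hypothetical strong contrast stretch: expand lo..hi to 0..1, clip the rest."""
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))

def distinct_output_levels(process_bits, out_bits=8, samples=4096):
    """Count the distinct output codes left after stretching a smooth ramp
    that was first stored at `process_bits` precision."""
    out_max = (1 << out_bits) - 1
    seen = set()
    for i in range(samples):
        x = i / (samples - 1)                     # ideal smooth gradient
        y = stretch(quantize(x, process_bits))    # adjust the stored value
        seen.add(round(y * out_max))              # deliver at out_bits
    return len(seen)

levels_8 = distinct_output_levels(8)
levels_10 = distinct_output_levels(10)
print("ramp held at 8-bit :", levels_8, "distinct output levels")
print("ramp held at 10-bit:", levels_10, "distinct output levels")
```

In this model the ramp held at 8-bit comes out of the stretch with only a fraction of the 256 available output levels, i.e. visible steps, while the ramp held at 10-bit fills most of them. Dithering is not modelled.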
One other thought - I'm assuming the GH5 will, like the GH4, give a choice of luminance range - full range (0-255), extended (16-255) or 'broadcast' limited (16-235) range. So you could at least avoid the compression that will occur in Kdenlive with full range clips by shooting extended or limited range. Extended (16-255) range, however, will get clipped to limited 16-235 range when effects/transitions are applied.

Also, maybe check out a related and equally boring post I made the other day that illustrates what the Kdenlive WFM actually displays. Important to know, especially if you are processing full range material.

viewtopic.php?f=265&t=138529

|

Registered Member

|

Interesting to see this though:

https://www.reddit.com/r/linux/comments ... _released/

Never used Blender myself.

|

Registered Member

|

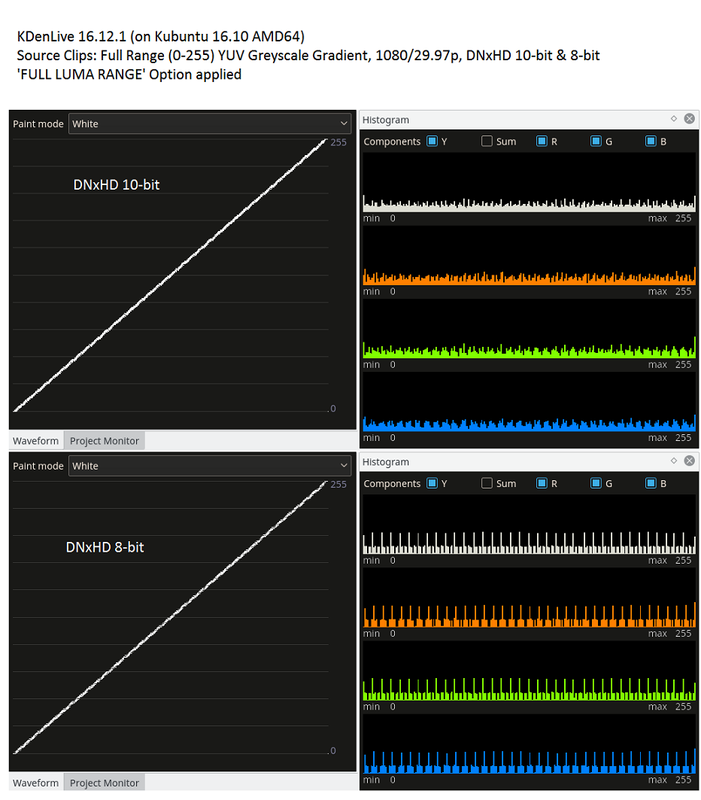

The VirtualDub frame shots (cropped and stacked) to show the relative degree of 'banding' in the rendered gradients: http://imgur.com/a/g92Jg

And the image above shows screen shots of the Kdenlive WFM and Histogram plots generated with the 10-bit and 8-bit DNxHD greyscale gradient clips on the timeline and the 'Full Luma Range' option applied.

Note that the luma (Y) values for the WFM and Luma Histogram are derived indirectly by Rec709 coefficients applied after conversion to RGB. So although the WFM and Luma Histogram are scaled 0-255, what they actually represent is compressed 16-235 range. The 0-255 scaling for the R, G and B channel Histograms, however, is valid.

So yes, with 10-bit full range inputs there is greater precision in the compression to 16-235 range that occurs on import, but processing and exporting in this compressed form is really not the best situation at all. The compressed 16-235 luma cannot be internally scaled back to full 0-255 using the Levels effect; all that brings about is clipping (clamping) to 16-235 instead, with more scaling imprecision introduced in the process. That's why I think the greater priority is provision for importing, processing and exporting full range material at full (data) levels.

If MLT works with ffmpeg -vf filters, feasibly the compression that occurs with native full range clips could be avoided by using the mergeplanes filter to modify the decode output so that the full range is passed through without deference to the 'full range' pix_fmt flag. Once imported at full data levels, surely then it's largely a matter of applying the respective transfer coefficients.

Maybe others can read code, but I'm not a programmer and don't know what's possible with MLT. That's why it needs someone who can address these needs at the MLT level. All I can do is bring attention to the fundamental issue based on the evidence in working practice.
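The point about the WFM reading 0-255 while the underlying luma is 16-235 can be sketched numerically. The Python below is an illustration only, not Kdenlive or MLT code: it assumes the standard Rec709 luma coefficients and a conventional limited-range Y'CbCr to full-range RGB conversion, and the function names are invented for the example.

```python
# Rec.709 luma coefficients
KR, KG, KB = 0.2126, 0.7152, 0.0722

def limited_ycbcr_to_rgb(y, cb, cr):
    """Convert 8-bit limited-range Y'CbCr (Rec.709) to 8-bit full-range R'G'B'."""
    yn = (y - 16) / 219      # luma 16-235 normalised to 0.0-1.0
    pb = (cb - 128) / 224    # chroma 16-240 normalised to -0.5..+0.5
    pr = (cr - 128) / 224
    r = yn + 2 * (1 - KR) * pr
    b = yn + 2 * (1 - KB) * pb
    g = (yn - KR * r - KB * b) / KG

    def to_code(v):
        # Scale to 0-255 and clamp out-of-range results
        return min(255, max(0, round(v * 255)))

    return to_code(r), to_code(g), to_code(b)

def luma_from_rgb(r, g, b):
    """Luma as a scope would derive it from RGB with Rec.709 coefficients."""
    return round(KR * r + KG * g + KB * b)

# Neutral greys (Cb = Cr = 128) at the limits and middle of the 16-235 range
for y in (16, 126, 235):
    rgb = limited_ycbcr_to_rgb(y, 128, 128)
    print("Y' =", y, "-> RGB", rgb, "-> scope luma", luma_from_rgb(*rgb))
```

Under these assumptions a stored luma of 16 is displayed as 0 and a stored luma of 235 as 255, so a scope that derives luma from RGB shows a 0-255 trace even though the frame data occupies 16-235.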

Last edited by Inapickle on Mon Jan 23, 2017 1:08 pm, edited 3 times in total.

|

|

Registered Member

|

And I see that periodic requests for high bit depth processing have been cropping up for nigh on 8 years now:

viewtopic.php?f=265&t=112573&p=270839&hilit=mlt+10+bit#p270839

Has the perspective changed on that?
